Chapter 5: Technical manual

Revision as of 17:10, 2 November 2011


1. Introduction

The goal of this manual is to provide some practical considerations for collecting linguistic data from fieldwork, and ideas for making this data accessible, from a technical/ICT point of view. Examples are drawn from our past experiences with the SAND project.

2. General recommendations

2.1 Transcriptions

Assumption: the raw data we start out with is dialectal speech (sound). The data we need in a computer for searching, tagging, etc., is text. So the first thing to consider is best practice for transcribing the sound into text.

- Keep transcription protocols as simple as possible, but not simpler (more complications mean a greater chance of errors).

- Use open and standard formats (plain text in a standard character encoding).

- Use a real transcription program, preferably one that saves its output as XML, because XML can be processed with widely available standard libraries. Also, a good transcription program will establish a link between your transcription and the audio (the specific slice of the audio), which is necessary if you want to go back from your transcripts to the audio later, and it will take care of things like keeping different speakers separate, etc.

- If you plan to use some kind of automatic tagging program, chances are that it needs its input in a specific format. If your transcripts are XML, you can use XML processing libraries, which makes converting your transcriptions to whatever format the tagging application needs a lot easier.

- Try not to be too creative with your transcription program: use its features in the intended way. Be careful with interspersing your transcriptions with metadata: keep metadata and data separate. Otherwise you end up with parts of the transcription which have possibly unclear 'special' meanings, requiring extra effort to process, and which cannot be checked for consistency by the program. See also the next point.

- Rely as much as possible on constraints which are enforced by your software, not on the presumed accuracy of the transcribers. People always make unpredictable mistakes, and different people will interpret guidelines in different ways.
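The conversion mentioned in the list above can be sketched as follows. This is a minimal illustration, not the SAND code: the element name `interval`, the attributes `speaker`, `start` and `end`, the time values and the tab-separated output format are all assumptions made for the example. A real transcription program defines its own XML schema, and a real tagger defines its own input format.

```python
import xml.etree.ElementTree as ET

# Hypothetical transcript layout (element and attribute names are assumptions,
# the time values are made up for the example).
SAMPLE = """\
<transcript>
  <interval speaker="interviewer" start="10.00" end="12.40">Text of question as spoken</interval>
  <interval speaker="informant1" start="12.40" end="15.10">k weet datij zal moete were keren.</interval>
</transcript>
"""


def intervals_to_lines(xml_text):
    """Return one tab-separated line per interval: speaker, start, end, text."""
    root = ET.fromstring(xml_text)
    lines = []
    for interval in root.iter("interval"):
        text = (interval.text or "").strip()
        lines.append("\t".join([
            interval.get("speaker", ""),
            interval.get("start", ""),
            interval.get("end", ""),
            text,
        ]))
    return lines


for line in intervals_to_lines(SAMPLE):
    print(line)
```

Because the speaker and the time slice live in attributes rather than in the transcribed text, the converter never has to guess where metadata ends and data begins.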

2.2 Tokenizing

The smallest units of a transcription as described in section 2.1 are generally larger than single words: speech is usually transcribed as sentences, or as fragments of a certain maximum length (10 seconds in the SAND, for example). But if you want to attach metadata (e.g. lemmata, PoS tags) to individual words, you have to split the fragments from your transcription program into individual words. This is an important reason to keep transcription protocols simple and to keep metadata separate from transcriptions: basically, you want to be able to split your text on whitespace, disregard punctuation, and end up with a list of tokens. Metadata encoded in the text, which has to be disregarded or handled in a special way while tokenizing, is an extra complication and increases the possibility of errors.
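As a minimal sketch of the "split on whitespace, disregard punctuation" approach (the function name `tokenize` is ours, and treating every ASCII punctuation character as strippable is an assumption that may not suit every transcription protocol):

```python
import string


def tokenize(fragment):
    """Split a fragment on whitespace and strip punctuation from both ends of each token."""
    tokens = []
    for raw in fragment.split():
        token = raw.strip(string.punctuation)
        if token:  # drop items that consisted of punctuation only
            tokens.append(token)
    return tokens


clean = tokenize("k weet datij zal moete were keren.")
coded = tokenize("[a=j] k weet datij zal moete were keren. [/a]")
print(clean)
print(coded)
```

For the clean fragment this yields exactly the seven spoken words. For the coded fragment the list also contains `a=j` and `a`, which are not words at all: metadata mixed into the text has leaked into the token list and would need special handling.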

3. SAND problems and solutions

3.1 Encoded metadata in transcripts

The SAND transcripts used codes for questions and answers in the transcripts themselves, instead of in some kind of separate metadata layer. So questions were numbered like this:

[v=067] Text of question as spoken [/v]

Affirmative answers were coded like this:

[a=j] Text of answer as spoken [/a]

And negative answers like this:

[a=n] Text of answer as spoken [/a]

There are a couple of problems with this approach. First, correct demarcation of metadata and data is dependent on the transcriber not making typing errors. Variants like

[v=067 Text of question [/v]

or

[a=j[ Text of answer [/a]

just to name a few, complicate correct parsing of the transcript considerably.
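To illustrate why such typos matter, here is a sketch of a strict parser for the codes shown above. It is not the parser used in the SAND project; it assumes that each interval contains exactly one coded question or answer and nothing else, and the function name `parse_interval` is hypothetical.

```python
import re

# Strict patterns for the codes described above; the whole interval must match.
QUESTION = re.compile(r"\[v=(\d+)\]\s*(.*?)\s*\[/v\]")
ANSWER = re.compile(r"\[a=([jn])\]\s*(.*?)\s*\[/a\]")


def parse_interval(text):
    """Return (kind, code, spoken_text), or raise ValueError for malformed markup."""
    text = text.strip()
    match = QUESTION.fullmatch(text)
    if match:
        return ("question", match.group(1), match.group(2))
    match = ANSWER.fullmatch(text)
    if match:
        return ("answer", match.group(1), match.group(2))
    raise ValueError(f"malformed or uncoded interval: {text!r}")


print(parse_interval("[v=067] Text of question as spoken [/v]"))

for broken in ("[v=067 Text of question [/v]", "[a=j[ Text of answer [/a]"):
    try:
        parse_interval(broken)
    except ValueError as error:
        print(error)
```

A strict parser at least reports the broken intervals so that they can be corrected by hand. A lenient parser would have to guess what the transcriber meant, and every guess is a possible source of silent errors.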

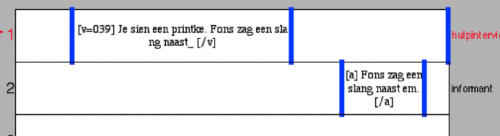

Also note that the questions are numbered, but the answers are not. The numbers are left implicit. This sort of shorthand is generally not a good idea. It is understandable, though: if you observe the following fragment of a screenshot of the transcription program PRAAT:

it is, for a human who views the screen, obvious to which question the answer in the informant tier belongs. But -- and this is the crux here -- such 'visual' relationships often do not have a counterpart in the underlying data structures; they are 'visual only', and very hard to figure out for a computer program which has to parse the transcript files. So the lesson here is: keep relationships like this explicit if you want to work with your data outside of your transcription program.
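One way to make the relationship explicit after the fact is sketched below. The rule used here, "an answer belongs to the most recent preceding question", is an assumption made for the illustration and will not hold for every interview. The class `Interval`, the function `link_answers` and the time values are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Interval:
    start: float                       # start time in seconds
    kind: str                          # "question" or "answer"
    text: str
    question_id: Optional[str] = None  # explicit link to the question


def link_answers(intervals):
    """Copy the number of the most recent question onto each following answer."""
    current = None
    linked = []
    for item in sorted(intervals, key=lambda i: i.start):
        if item.kind == "question":
            current = item.question_id
            linked.append(item)
        else:
            if current is None:
                raise ValueError(f"answer at {item.start}s has no preceding question")
            linked.append(Interval(item.start, item.kind, item.text, current))
    return linked


interview = [
    Interval(10.0, "question", "Text of question as spoken", "067"),
    Interval(12.4, "answer", "Text of answer as spoken"),
]
for item in link_answers(interview):
    print(item.kind, item.question_id, item.text)
```

Storing the question number with the answer at transcription time would make this guessing step unnecessary.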

Another example, which caused a lot of trouble in converting the SAND transcript files to database tables, is that of the so-called 'clusters'. This is a snippet of informant text, an interval, from the Flemish municipality of Aalter:

[a=j] k weet datij zal moete were keren. [/a]

("I know that he will have to return").

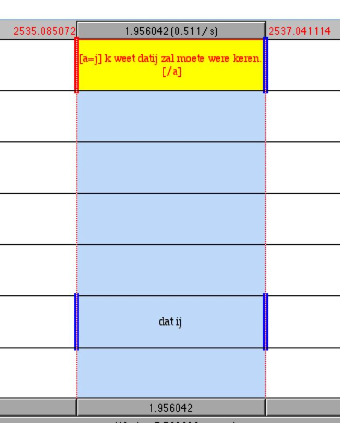

This interval has an interesting twist: one of the words is a cluster. datij is, in the cluster tier, interpreted as consisting of two words: dat hij ("that he"). In PRAAT, this looks like this:

For a human who is looking at the screen, it is obvious that dat ij in the cluster tier belongs to datij in the informant1 tier. However, this is not necessarily the case for a computer program. This particular interval is a good illustration for the heuristic we used for connecting split clusters to words in the transcript, which roughly amounts to "if you find something in the cluster tier, then find the interval in the interview which is 'directly above' (i.e. which has the same start- and endtime as the current cluster interval) and look in there for a string which is equal to the words of the cluster tier put together". In this case, datij is found without problems. But in many cases, things got rather error-prone: there could be more than one cluster in the same interval in the cluster tier, hence it was not clear (again, to a computer program) where one cluster ended and the next began; there could be more than one interval "above" the cluster interval (i.e. intervals from different tiers); sometimes, the begin- and endtimes of interview and cluster interval did not match exactly, which meant that it was difficult to decide if an interval was "above" the cluster interval at all; and often the spelling of the parts of the cluster was different from the cluster itself, e.g. datij represented in the cluster tier as dat hij. All in all, a lot of complicated code was needed to try and recognize clusters, and a lot of time-consuming manual correcting was needed afterwards.
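The heuristic described above can be sketched roughly as follows. This is a reconstruction for illustration only, not the original SAND code: the class `TierInterval`, the function `find_cluster_word`, the `tolerance` parameter and the time values are assumptions.

```python
from dataclasses import dataclass


@dataclass
class TierInterval:
    tier: str
    start: float
    end: float
    text: str


def find_cluster_word(cluster, intervals, tolerance=0.0):
    """Find the word in an interval 'directly above' that equals the joined cluster parts."""
    joined = "".join(cluster.text.split())
    for interval in intervals:
        if interval.tier == cluster.tier:
            continue  # only look in other tiers
        same_span = (abs(interval.start - cluster.start) <= tolerance
                     and abs(interval.end - cluster.end) <= tolerance)
        if same_span and joined in interval.text.split():
            return interval, joined
    return None


informant = TierInterval("informant1", 12.4, 15.1,
                         "[a=j] k weet datij zal moete were keren. [/a]")
cluster = TierInterval("cluster", 12.4, 15.1, "dat ij")

print(find_cluster_word(cluster, [informant, cluster]))
# A normalised spelling in the cluster tier no longer matches the transcript:
print(find_cluster_word(TierInterval("cluster", 12.4, 15.1, "dat hij"), [informant]))
```

The sketch returns the first matching interval and assumes one cluster per cluster interval, so it shares the weaknesses described above: it cannot tell two clusters in one interval apart, it cannot choose between several intervals "above", and a spelling difference such as dat hij versus datij makes the lookup fail. The `tolerance` parameter is one possible way to cope with start and end times that do not match exactly.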

It would have been much better if the transcription program itself had been aware of these clusters, that is, if the relationships between words and clusters had been reflected in the underlying transcript files. I would advise anyone to get familiar with the feature set of their transcription program, use these features to the fullest, and stay within the existing feature set as much as possible. If you really need to do something which cannot natively be done by your transcription program, think hard about ways to minimise the possibility of errors. Assume that errors will occur and plan accordingly. Keep things simple, and meanings explicit. Make sure typos are caught in correction rounds.

4. Storage

There are a couple of different ways to store and search textual corpora. In the SAND project we put everything directly in a relational database and use its search features. Another possibility would be to store your corpus as a text file and use a dedicated full text search engine (for instance: Lucene, a general one; or one that is specifically meant for linguistic corpora like IMS Corpus Workbench) to index and search it.

It is also possible to store your corpus as an XML file and use standard XML tools (XPath, XQuery) to search it. A downside is that this kind of search typically does not use an index, so it is quite slow compared to database or text search engine solutions. However, if your corpus is small enough, that need not be a problem.
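A small sketch of such a search, using the limited XPath subset supported by Python's standard `xml.etree.ElementTree` module. The corpus layout (element `interval`, attribute `speaker`) is a made-up example, not the SAND format.

```python
import xml.etree.ElementTree as ET

# Hypothetical corpus layout, for illustration only.
CORPUS = """\
<corpus>
  <interval speaker="interviewer">Text of question as spoken</interval>
  <interval speaker="informant1">k weet datij zal moete were keren.</interval>
</corpus>
"""


def utterances_by(root, speaker):
    """Return the text of every interval spoken by the given speaker."""
    # The speaker name is pasted into the path, so it must not contain a quote.
    return [el.text for el in root.findall(f".//interval[@speaker='{speaker}']")]


root = ET.fromstring(CORPUS)
print(utterances_by(root, "informant1"))
```

Every call walks the whole tree, which is why this approach is only comfortable for small corpora.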

In our view, one of the nice things about using a relational database for storage is that (if the database is properly normalized and the information is stored in small parts) it is relatively easy to generate other formats from it. For example, generating XML from such a database is quite doable; the reverse (generating a database from XML files) is a lot more work.
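As a rough sketch of generating XML from a normalized table: the example below uses Python's built-in `sqlite3` module as a stand-in for the actual database, and the table layout (`word` with `interval_id` and `position` columns) and the output element names are assumptions, not the SAND schema.

```python
import sqlite3
import xml.etree.ElementTree as ET

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE word (id INTEGER PRIMARY KEY, interval_id INTEGER, "
    "position INTEGER, form TEXT)"
)
conn.executemany(
    "INSERT INTO word VALUES (?, ?, ?, ?)",
    [(1, 1, 1, "k"), (2, 1, 2, "weet"), (3, 1, 3, "datij")],
)


def export_xml(connection):
    """Build one <interval> element per interval_id, with a <w> child per word."""
    root = ET.Element("corpus")
    parents = {}
    rows = connection.execute(
        "SELECT id, interval_id, form FROM word ORDER BY interval_id, position"
    )
    for word_id, interval_id, form in rows:
        parent = parents.get(interval_id)
        if parent is None:
            parent = ET.SubElement(root, "interval", id=str(interval_id))
            parents[interval_id] = parent
        ET.SubElement(parent, "w", id=str(word_id)).text = form
    return ET.tostring(root, encoding="unicode")


print(export_xml(conn))
```

Because each word is a separate row, the exporter only has to decide how to nest and name the elements. Going the other way means recovering that row structure from whatever the XML happens to contain.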

Another advantage of using a relational database is that, as long as you use a storage engine which supports foreign key constraints and transactions, you can use the database as a safeguard for the integrity of your data.

4.1 Foreign key constraints

Say, for example, that you have a database table with all the words in your corpus, each word having a unique id, and another table with PoS tags, which is linked to the word table by the unique word id. E.g.: word id 12345 has PoS tag V(-e,end2,inf,mod). If your database supports foreign keys, you can make it impossible to enter a non-existent word id in the PoS table. All ids in the PoS table are then guaranteed to exist in the word table. In the SAND we use the InnoDB storage engine for MySQL, which supports this feature.
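The idea can be tried out with a small sketch. Note that it uses Python's built-in `sqlite3` module as a stand-in so that it runs without a server; the SAND itself uses MySQL with InnoDB. The table and column names and the word form `example` are placeholders.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite only enforces foreign keys when asked to
conn.execute("CREATE TABLE word (id INTEGER PRIMARY KEY, form TEXT NOT NULL)")
conn.execute("""
    CREATE TABLE pos_tag (
        word_id INTEGER NOT NULL REFERENCES word(id),
        tag     TEXT NOT NULL
    )
""")
conn.execute("INSERT INTO word (id, form) VALUES (12345, 'example')")


def try_insert_tag(word_id, tag):
    """Insert a PoS tag; return False if the word id does not exist."""
    try:
        conn.execute("INSERT INTO pos_tag (word_id, tag) VALUES (?, ?)", (word_id, tag))
        return True
    except sqlite3.IntegrityError:
        return False


print(try_insert_tag(12345, "V(-e,end2,inf,mod)"))  # word exists
print(try_insert_tag(99999, "V(-e,end2,inf,mod)"))  # no such word: rejected by the database
```

The check is done by the database itself, so it also protects against mistakes in import scripts and manual edits, not only against mistakes in the tagging application.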

4.2 Transactions

The InnoDB storage engine also supports transactions. This basically means that it is possible to execute a group of statements as a whole: either all of the statements succeed, or all fail, so that the database is never left in an inconsistent state. The canonical example comes from the banking world: transferring an amount of money from account A to account B consists of (1) subtracting the amount from account A; (2) adding the amount to account B. Executing step 1 and not step 2 would leave the system in an inconsistent state: the money has disappeared. So both steps should be wrapped in a transaction: if something goes wrong after step 1, this step is 'rolled back' and the system is back where it started. Only if step 1 and step 2 both succeed is the transfer 'committed' and made final.

In the SAND we use this feature in the tagging part of the application. Tagging involves storing categories and attributes in different tables. You don't want to store 'half a tag', so the group of statements which saves the tagging for a word is wrapped in a transaction.
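A sketch of the 'no half tags' idea, again using Python's built-in `sqlite3` module as a stand-in for MySQL/InnoDB. The two-table layout, the table names and the split of a tag into a category and a list of attributes are assumptions made for the example, not the actual SAND schema.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE tag_category (word_id INTEGER PRIMARY KEY, category TEXT NOT NULL)")
conn.execute("CREATE TABLE tag_attribute (word_id INTEGER NOT NULL, attribute TEXT NOT NULL)")


def save_tag(word_id, category, attributes):
    """Store category and attributes together; on any error nothing is stored."""
    try:
        with conn:  # commits on success, rolls back if an exception is raised
            conn.execute("INSERT INTO tag_category VALUES (?, ?)", (word_id, category))
            conn.executemany(
                "INSERT INTO tag_attribute VALUES (?, ?)",
                [(word_id, attribute) for attribute in attributes],
            )
        return True
    except sqlite3.IntegrityError:
        return False


def count(table):
    """Number of rows in one of the two tag tables."""
    return conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()[0]


print(save_tag(12345, "V", ["inf", "mod"]))  # both tables are updated
print(save_tag(12346, "V", ["inf", None]))   # the second attribute is invalid: all rolled back
print(count("tag_category"), count("tag_attribute"))
```

In the failing call the category row and the first attribute row have already been inserted when the error occurs; the rollback removes them again, so the tables never contain a partial tag.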

4.3 XML as an extra option

We understand that many researchers prefer to work with XML files directly, and that for long-term archival storage of linguistic data a binary database file is probably not a good idea. That is why we are currently (May 2007) in the process of generating TEI-encoded XML files of the interviews from the SAND project; these will be available for download in the near future.

5. Syntactic tagging

Future project.